In this blog post I'll cover how to use WebJars in conjunction with sbt. The plan is to download Bootstrap, and maybe other web libraries, as Maven artifacts.

Motivation

I'm well aware that there are package managers for JavaScript, at least I've heard of them - not overly good things - but I've heard of them. Chances are high that by the time you reach the end of this post a new one will have been invented.

In my bubble, for many years now, I have had to fiddle around with the JVM's de facto standard build systems like Maven or Gradle, and if I neglect the countless hours of frustration with them, I have to admit they served me well. As such, I'll stay with this ecosystem, but at least I'll use it in creative ways. Well, sort of. I'll use sbt to download dependencies and build the example project. I hope I got your head spinning in the meantime.

Reality check: One thing I would advise against is having too many package managers in your corporate setting; it is a maintenance burden which gets multiplied by each new system. Naturally, every build system wants to outmatch the other ones, either in complexity or in the wealth of features it provides. (One piece of advice: sometimes it pays off to bet on the most conservative option, but I doubt you'll find such a thing in JavaScript land. 😉)

In some bright moments, however, different worlds work together, and this blog post wants to shed some light on how to combine web technologies with tools used in corporate settings.

To put it bluntly, I try to download a jar file with prepackaged static content, a bunch of JavaScript and other resources, and put it in the right place - on demand.

For example, I want to use Bootstrap in my project. Why not just go to their website, download it, extract the things you are interested in, and move on?

Of course this is a valid approach, and most probably the one used by the majority of developer teams, but it has some drawbacks.

First, it is unclear where such frameworks come from. It is also hard to tell afterwards which file belongs to which framework. You are left guessing based on directory or zip file names. Another concern is the licensing situation - how do you tell whether a bunch of code causes legal issues for your project? And what if you want to update a certain library? Often you can be glad if you even know which version you currently use.

Of course you can document it, but odds are high that this documentation is not really in sync with your code; at the very least you have to check manually that it is. Another anti-pattern which comes to mind is that third-party code will be checked into your code repository, no matter how hard you fight it, by some innocent but mindless colleague. The best thing about it is that you'll surely find the same library downloaded and checked in multiple times, maybe also in different versions, opening the door to subtle bugs, oh my god, in PRODUCTION. Insane! Mind blowing! (Yes I play bass and yes I slap and yes I know davie504)

There has to be a reason why such a zoo of package managers exists, several for each programming language, along with their ecosystems, all fighting for the attention of the very confused poor Mr. Bob Developer.

As such, for all of the good reasons cited above, there is an excuse to automate it yet again and write a blog post about it, mainly for you, who read this with intense boredom, waiting for the anticlimax which is yet to come.

Implementation

I'll continue to work on the artifact-dashboard project and enhance version 0.4 by adding the necessary commands to the sbt build file to download Bootstrap.

Before reading on, maybe you want to inform yourself about webjars.org, whose mission statement is:

WebJars are client-side web libraries (e.g. jQuery & Bootstrap) packaged into JAR (Java Archive) files.

This is exactly what we need, and what I tried to convey above with my ranting about the problems you get if you don't use any sort of package manager. Webjars.org is a really cool project for all JVM engineers who don't want to get their hands too dirty with front end development, who want to stay cozy in their own world and not touch those strange JavaScript package managers altogether.
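Since a WebJar is just a jar, and a jar is just a zip file, you can peek inside with nothing but the JDK. Here is a minimal sketch of that idea; the object name `WebJarContents` and the helper `entriesEndingWith` are made up for this post, and the jar path has to be passed in because its real location depends on your local dependency cache.

```scala
import java.io.File
import java.util.zip.ZipFile
import scala.collection.JavaConverters._

// Hypothetical helper for illustration, not part of artifact-dashboard.
object WebJarContents {

  // Returns the names of all jar entries whose path ends with one of the suffixes.
  def entriesEndingWith(jar: File, suffixes: Seq[String]): List[String] = {
    val zip = new ZipFile(jar)
    try {
      zip.entries().asScala
        .map(_.getName)
        .filter(name => suffixes.exists(s => name.endsWith(s)))
        .toList // force the iterator before the zip file is closed
    } finally zip.close()
  }

  def main(args: Array[String]): Unit = args.headOption match {
    case Some(path) =>
      entriesEndingWith(new File(path), Seq("bootstrap.min.js", "bootstrap.min.css"))
        .foreach(println)
    case None =>
      println("usage: WebJarContents <path-to-webjar>")
  }
}
```

Run it against the Bootstrap jar that sbt downloaded and you should see where the minified files live inside the archive, which is handy to know before writing any unpacking filters.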

Besides, source code management is trivial, right?

Don't worry, downloading Bootstrap via sbt takes far less text than the rant above, and is maybe more interesting.

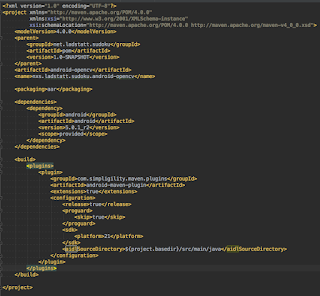

To download and unpack it, not much is necessary, witness it here:

    enablePlugins(ScalaJSPlugin)

    name := "artifact-dashboard"
    scalaVersion := "2.12.10"

    // This is an application with a main method
    scalaJSUseMainModuleInitializer := true

    libraryDependencies ++= Seq(
      "org.scala-js" %%% "scalajs-dom" % "1.0.0",
      "org.webjars" % "bootstrap" % "4.4.1"
    )

    // defines sbt name filters for unpacking
    val bootstrapMinJs: NameFilter = "**/bootstrap.min.js"
    val bootstrapMinCss: NameFilter = "**/bootstrap.min.css"
    val bootstrapFilters: NameFilter = bootstrapMinCss | bootstrapMinJs

    // magic to invoke unpacking stuff in the compile phase
    resourceGenerators in Compile += Def.task {
      val jar = (update in Compile).value
        .select(configurationFilter("compile"))
        .filter(_.name.contains("bootstrap"))
        .head
      val to = (target in Compile).value
      unpackjar(jar, to, bootstrapFilters)
      Seq.empty[File]
    }.taskValue

    // a helper function which unzips files defined in given namefilter
    // to a given directory, along with some reporting
    def unpackjar(jar: File, to: File, filter: NameFilter): File = {
      val files: Set[File] = IO.unzip(jar, to, filter)
      // print it out so we can see some progress on the sbt console
      println(s"Processing $jar and unzipping to $to")
      files foreach println
      jar
    }

This is part of v0.5 of the artifact-dashboard project, and it is the complete sbt file necessary to download a version of Bootstrap - at the time of writing the most current one - unpack it to the right place, and make it available to the JavaScript code.
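The resource generator in the build file unpacks as a side effect and reports no generated files to sbt. If you would rather have sbt track the unpacked files, one possible variation is sketched here. It is not what the project does: the name `bootstrapAssets` is made up, the files land under sbt's managed resources directory instead of `target` (so the paths in the html file would have to change), and a missing jar produces an error message instead of a bare `.head` exception.

```scala
// build.sbt fragment - a sketch under the assumptions named above,
// not the artifact-dashboard build file.

// Hypothetical name; same two files as in the original filters.
val bootstrapAssets: NameFilter =
  ("**/bootstrap.min.css": NameFilter) | ("**/bootstrap.min.js": NameFilter)

resourceGenerators in Compile += Def.task {
  val jar = (update in Compile).value
    .select(configurationFilter("compile"))
    .find(_.getName.startsWith("bootstrap-"))
    .getOrElse(sys.error("bootstrap webjar not found among the compile dependencies"))
  // unpack into the managed resources directory and hand the files back to sbt
  val to = (resourceManaged in Compile).value
  IO.unzip(jar, to, bootstrapAssets).toSeq
}.taskValue
```

Whether this is worth it depends on how you serve the page; for a quick dashboard, unpacking straight into `target` as shown in the build file is perfectly fine.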

As usual, you only have to provide the correct paths to the unpacked files in your html file, and you can profit from all the goodies Bootstrap has to offer.
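To figure out those paths, it helps to know that classic WebJars keep their content under `META-INF/resources/webjars/<artifact>/<version>/`, and that unzipping preserves this directory structure. The following sketch just spells the resulting locations out; `BootstrapPaths` and `assetPath` are names invented for this post, and the `css/` and `js/` subfolders are my assumption about the Bootstrap 4.4.1 jar, so check the unpack output on the sbt console before relying on them.

```scala
// Hypothetical helper for illustration, not part of artifact-dashboard.
object BootstrapPaths {

  // Classic WebJars keep their files under this prefix inside the jar.
  val WebJarRoot = "META-INF/resources/webjars"

  // Builds the location of an unpacked asset, using forward slashes as in html.
  def assetPath(unpackDir: String, artifact: String, version: String, relative: String): String =
    s"$unpackDir/$WebJarRoot/$artifact/$version/$relative"

  def main(args: Array[String]): Unit = {
    // "target" is where the build file above unpacks to
    println(assetPath("target", "bootstrap", "4.4.1", "css/bootstrap.min.css"))
    println(assetPath("target", "bootstrap", "4.4.1", "js/bootstrap.min.js"))
  }
}
```

How these paths have to be written in the html file depends on where that file sits relative to the `target` directory.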

As a side note, I also tweaked a little bit how Scala.js is used; have a look at the source code / html file.

I think it is a nice combination of technologies. I don't know if it is popular, but at least it works for me.

Using Bootstrap, our download page looks far better; users will surely be more willing to click on a download link than ever before.

Here is a little screenshot of the current state of affairs, with v0.5 of artifact dashboard in action on my test instance:

Screenshot of Artifact Dashboard v0.5

Thanks for reading.
