JMH is a tool for microbenchmarking on the JVM. This weekend I used it to test some performance hotspots in my personal projects.

The JMH landing page is pretty much self-explanatory, and there is a samples page, too.

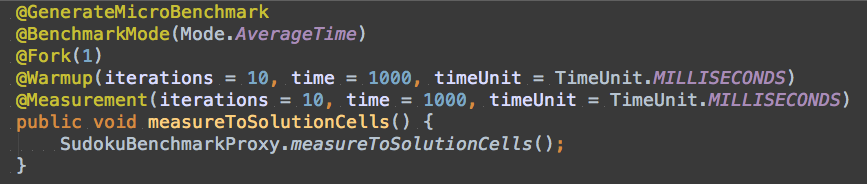

The only issue I had with JMH was that I couldn't get the Scala methods to be picked up by the @GenerateMicroBenchmark run. As a workaround I created a Java class that delegates to the Scala implementations I wanted to test; this works well enough for me.

The screenshot above shows the general idea: the Java code calls a Scala object that gathers all code related to performance testing. In this example, the SudokuBenchmarkProxy object is also the right place to set up the environment in which the performance test runs.

Update: Those problems had to do with my project setup. I have a multi-module Maven build, and the benchmarks are located in a submodule. It seems that, at the moment, the wrapper code in target/generated-sources/jmh is not generated when the build runs in a multi-module scenario, but only when Maven is invoked directly on the pom in which the JMH plugin is defined.

There is even a Maven Scala archetype for JMH that provides the basic setup, and I used it for the initial project setup. The Scala archetype (0.5.6), invoked like this:

will produce a configuration like this:

This runs fine when it is used on its own, but if you want to use it in a submodule, change it to this:

After this, JMH also creates the wrapper code in multi-module Maven builds. At least it solved the issue for me.

Update 2: As it turns out, this issue is already being worked on and was recently discussed on the mailing list.

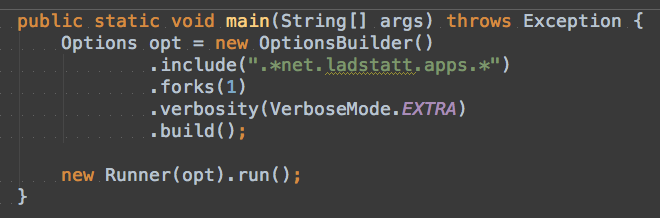

However, the most pleasant way to develop, debug, and work on your performance bottlenecks with JMH is to use its API. That way you can call it directly, for example like this:

This executes JMH on your code, using the regex pattern to search for annotated methods. The run() method even returns the collected data, so I suppose you could "easily" set up an automated performance regression testing system.
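To make the regression-testing idea a bit more concrete, here is a minimal sketch of the comparison step only. It assumes you have already extracted an average time per benchmark from whatever run() returned and stored it in a plain map; the class `RegressionCheck`, the method `findRegressions`, and the benchmark names are hypothetical and not part of JMH.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical helper, not part of JMH: flags benchmarks that got slower.
final class RegressionCheck {

    /**
     * Returns the names of benchmarks whose current average time exceeds the
     * baseline by more than the relative tolerance (0.10 means 10 percent).
     * Benchmarks without a baseline entry are skipped.
     */
    static List<String> findRegressions(Map<String, Double> baseline,
                                        Map<String, Double> current,
                                        double tolerance) {
        List<String> regressions = new ArrayList<>();
        for (Map.Entry<String, Double> entry : current.entrySet()) {
            Double reference = baseline.get(entry.getKey());
            if (reference == null) {
                continue; // new benchmark, nothing to compare against
            }
            if (entry.getValue() > reference * (1.0 + tolerance)) {
                regressions.add(entry.getKey());
            }
        }
        return regressions;
    }

    public static void main(String[] args) {
        // Made-up numbers, standing in for scores collected from two runs.
        Map<String, Double> baseline = new LinkedHashMap<>();
        baseline.put("solveEasy", 100.0);
        baseline.put("solveHard", 900.0);

        Map<String, Double> current = new LinkedHashMap<>();
        current.put("solveEasy", 104.0);  // within 10 percent of the baseline
        current.put("solveHard", 1200.0); // clearly slower

        System.out.println(findRegressions(baseline, current, 0.10)); // prints [solveHard]
    }
}
```

A fixed relative tolerance is the simplest possible rule; since benchmark scores are noisy, a real setup would probably want to take the measurement error into account as well.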

By default, JMH gives you a jar file called "microbenchmarks.jar" that contains all dependencies (as defined by Maven). This makes it rather trivial to execute the tests on different machines.

Speaking of which, it has to be underlined that performance testing is hard; the hardest part is creating comparable environments in which to run your tests. But this is trivial (?) compared with understanding the inner workings of the JVM. JMH hides much of this complexity and gives you a head start here!
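To give a feeling for the kind of complexity meant here, the following is a rough sketch of a hand-rolled timing loop. It is not how JMH works internally; `NaiveBench`, `averageNanos`, and the sample workload are made up for illustration. Even this small version already needs warm-up rounds and a place to put results so the measured work is not trivially removable, and it still ignores things JMH takes care of, such as running benchmarks in forked JVMs.

```java
import java.util.function.IntSupplier;

// Hand-rolled timing loop, for illustration only; use JMH for real measurements.
final class NaiveBench {

    // Results end up here to make it harder for the JIT to drop the work as dead code.
    static volatile long sink;

    /** Runs warm-up calls first, then returns the average nanoseconds per measured call. */
    static double averageNanos(IntSupplier work, int warmupCalls, int measuredCalls) {
        long acc = 0;
        for (int i = 0; i < warmupCalls; i++) {
            acc += work.getAsInt(); // give the JIT a chance to compile the hot path
        }
        long start = System.nanoTime();
        for (int i = 0; i < measuredCalls; i++) {
            acc += work.getAsInt();
        }
        long elapsed = System.nanoTime() - start;
        sink = acc; // publish the accumulated result
        return (double) elapsed / measuredCalls;
    }

    public static void main(String[] args) {
        // Sample workload: sum the numbers 0..999.
        IntSupplier work = () -> {
            int sum = 0;
            for (int i = 0; i < 1_000; i++) {
                sum += i;
            }
            return sum;
        };
        System.out.println("avg ns/call: " + averageNanos(work, 20_000, 20_000));
    }
}
```

The numbers this prints will vary from run to run and from machine to machine, which is exactly the problem a proper harness is there to tame.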

Moreover, microbenchmarking is a science in itself. Make sure you are repairing the "right" part of your application. It only pays off if you hit the big points, and such points are more often than not buried in "management processes".

Microbenchmarking may imply micro-optimizations, which often have the side effect of obfuscating your code base. Without a regression test harness built around those parts of your application, it is likely that the next clean-code developer will just "repair" the app back to where it started. ;)

But if need be and you _have_ to squeeze everything out of your JVM(s), JMH will be a great addition to your toolset.

If you want to know more, ask this guy.
